Unique Info About How To Build Spark

`df = spark.read.csv('.csv')` reads a CSV file into a DataFrame. To read multiple CSV files into one DataFrame, provide a list of paths.
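As a minimal sketch of the list-of-paths approach: PySpark's `DataFrameReader.csv` accepts either a single path or a list of paths, so several files can be loaded in one call. The helper names `collect_csv_paths` and `read_csvs`, the glob pattern, and the `header=True` option are assumptions made for illustration; they do not come from the original text. The session is passed in as an argument, so the block itself does not create one.

```python
import glob
from typing import List


def collect_csv_paths(pattern: str) -> List[str]:
    """Return the sorted list of files matching a glob pattern (hypothetical helper)."""
    return sorted(glob.glob(pattern))


def read_csvs(spark, paths: List[str]):
    """Load every file in `paths` into a single DataFrame.

    `spark` is expected to be an existing SparkSession.
    header=True assumes each file starts with a header row.
    """
    if not paths:
        raise ValueError("no CSV files to read")
    # DataFrameReader.csv takes one path or a list of paths.
    return spark.read.csv(paths, header=True)
```

With a live session, this could be called as `read_csvs(spark, collect_csv_paths("data/*.csv"))`, where `data/*.csv` is a placeholder pattern. All files should share the same columns, since they end up in one DataFrame.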

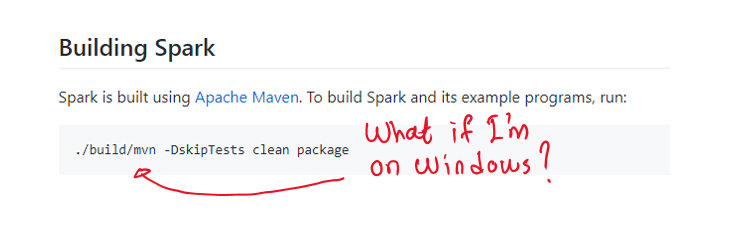

How to build Spark. This means the time it will take is 950 spark hours / 1 spark = 950 hours (approx.). For instance, you can build the Spark Streaming module using:

Building Spark using Maven requires Maven 3.0.4 or newer and Java 6+. Building Spark with Java 7 or later can create JAR files that may not be readable with early versions of Java 6. How do you build an Apache Spark data pipeline?

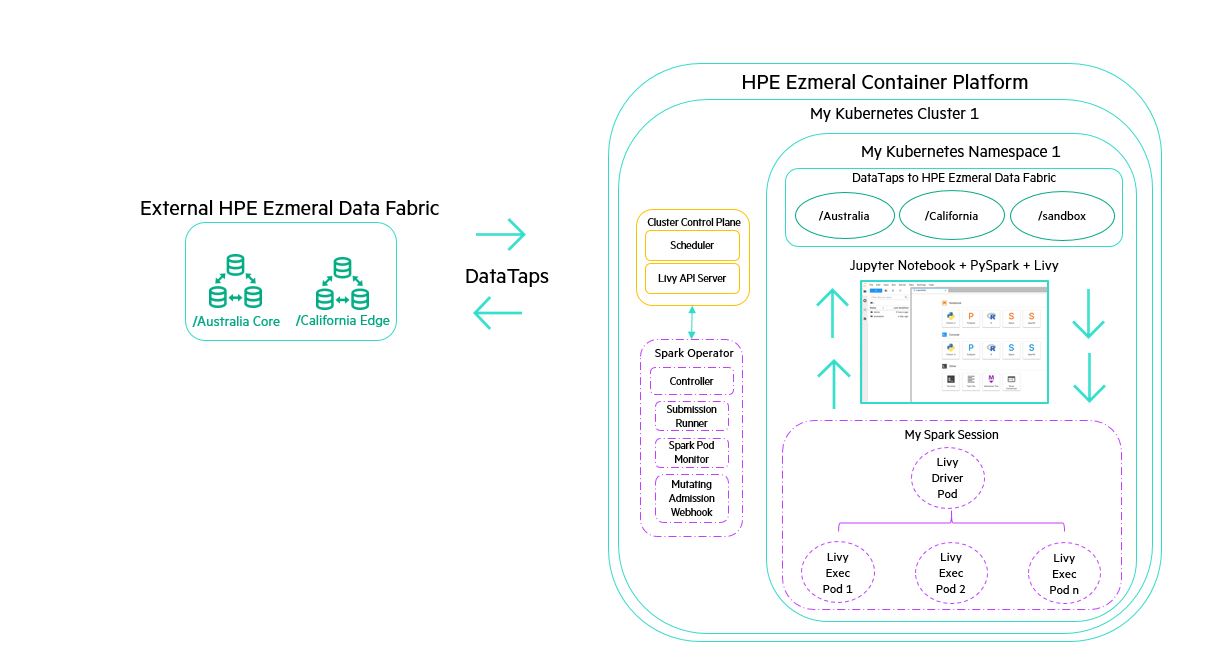

Spark’s architecture on Kubernetes, from their documentation. This blog will show you how to use Apache Spark native Scala UDFs in PySpark and gain a significant performance boost. Building a fat JAR file.

`kubectl create serviceaccount spark` `kubectl create`. Scala is Spark's native language, so we prefer to write Spark code in Scala. A data pipeline is a piece of software that collects data from various sources and organizes it so that it can be used strategically.
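The definition of a data pipeline above (collect from several sources, then organize) can be sketched in a few lines of plain Python. This is an illustrative toy under stated assumptions, not a Spark pipeline: the function names `collect` and `organize`, the two in-memory sources, and the record fields are all hypothetical.

```python
from typing import Callable, Dict, Iterable, List

Record = Dict[str, object]


def collect(sources: Iterable[Callable[[], List[Record]]]) -> List[Record]:
    """Pull records from every source and concatenate them in order."""
    records: List[Record] = []
    for source in sources:
        records.extend(source())
    return records


def organize(records: List[Record], key: str) -> Dict[object, List[Record]]:
    """Group records by a shared key so downstream code can use them."""
    grouped: Dict[object, List[Record]] = {}
    for record in records:
        grouped.setdefault(record[key], []).append(record)
    return grouped


# Two hypothetical in-memory sources standing in for files, APIs, or queues.
def orders_from_shop() -> List[Record]:
    return [{"customer": "ana", "amount": 30}, {"customer": "bo", "amount": 12}]


def orders_from_app() -> List[Record]:
    return [{"customer": "ana", "amount": 5}]


pipeline_output = organize(
    collect([orders_from_shop, orders_from_app]), key="customer"
)
```

In a Spark-based pipeline the same two stages would typically map to reading several inputs into DataFrames and then grouping or joining them; the plain-Python version only shows the shape of the idea.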

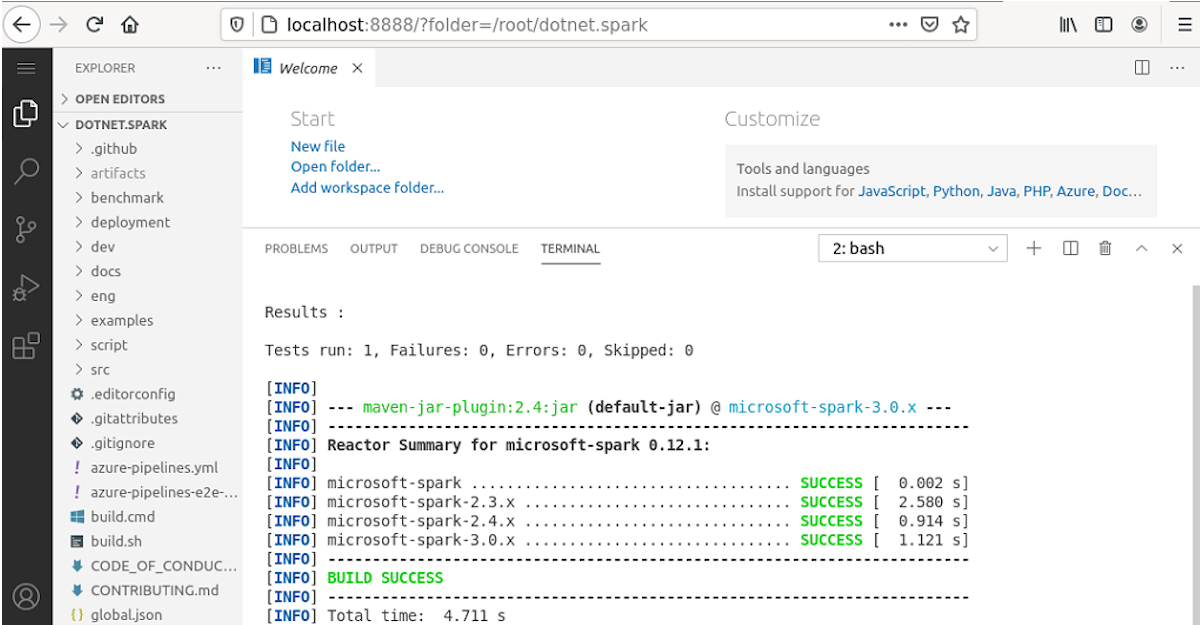

These steps will help in understanding the overall building process for any .NET for Apache Spark application. Due to the range and nature of Spark, even content that outstrips. This build is an excellent league starter and requires very little investment to start rolling over maps and bosses.

For example, machine learning (ML), extract. To a certain extent, everything in development may be represented as a data pipeline. TL;DR: we need to create a service account with `kubectl` for Spark: